Cognosa Capabilities: LLM access options

Open Source or Proprietary Large Language Models

Queries With — or Without — Context

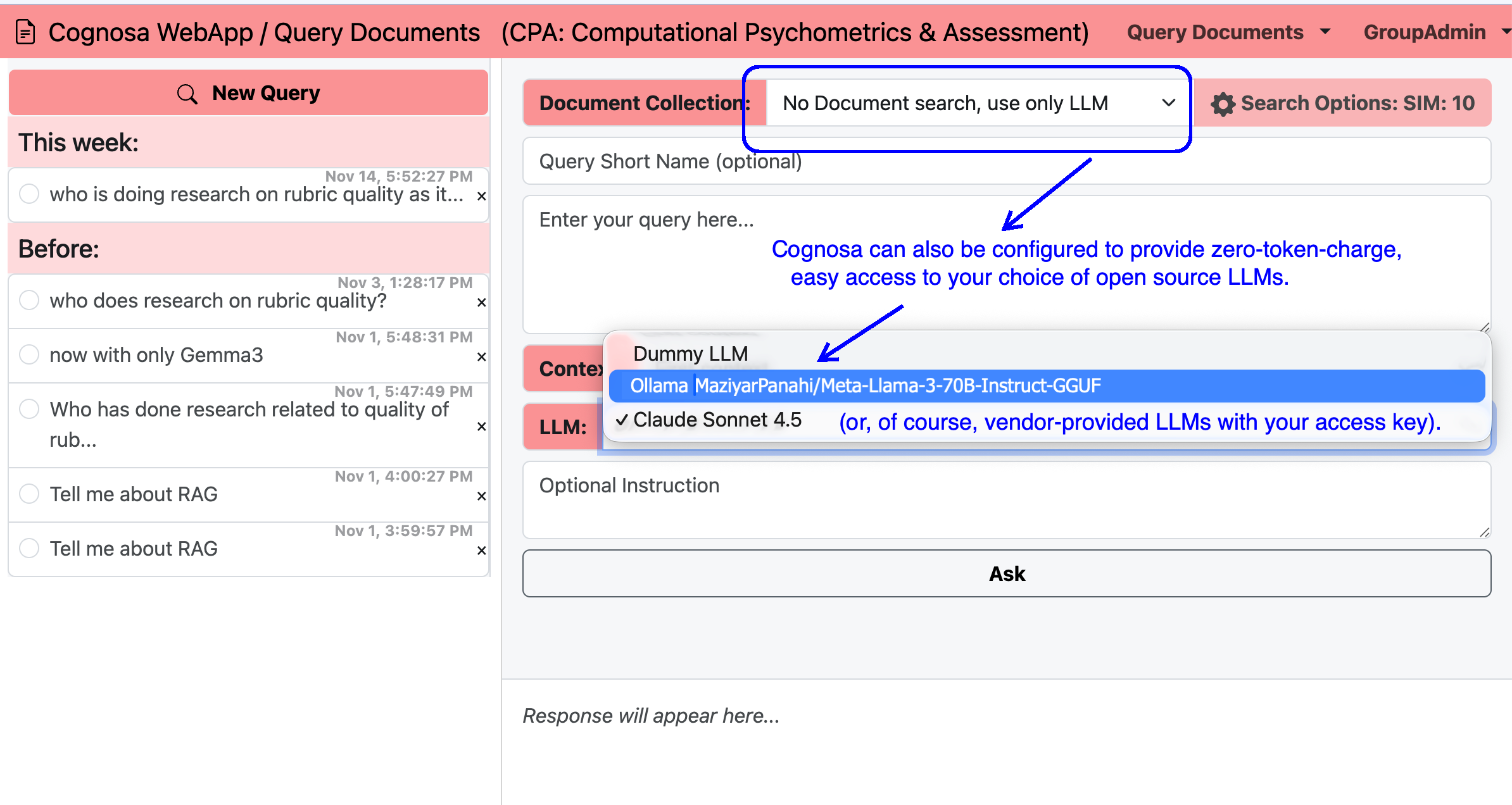

Cognosa supports two complementary ways of using AI. First, users can put questions directly to a large language model, much like using a standard chatbot. Second, and far more powerful, users can query an LLM that is enhanced with the organization‑specific data collections they are authorized to access. Both modes work with either free, open‑source models (no per‑token charges) or commercial models such as those from Anthropic or OpenAI, accessed with the customer’s own API key.

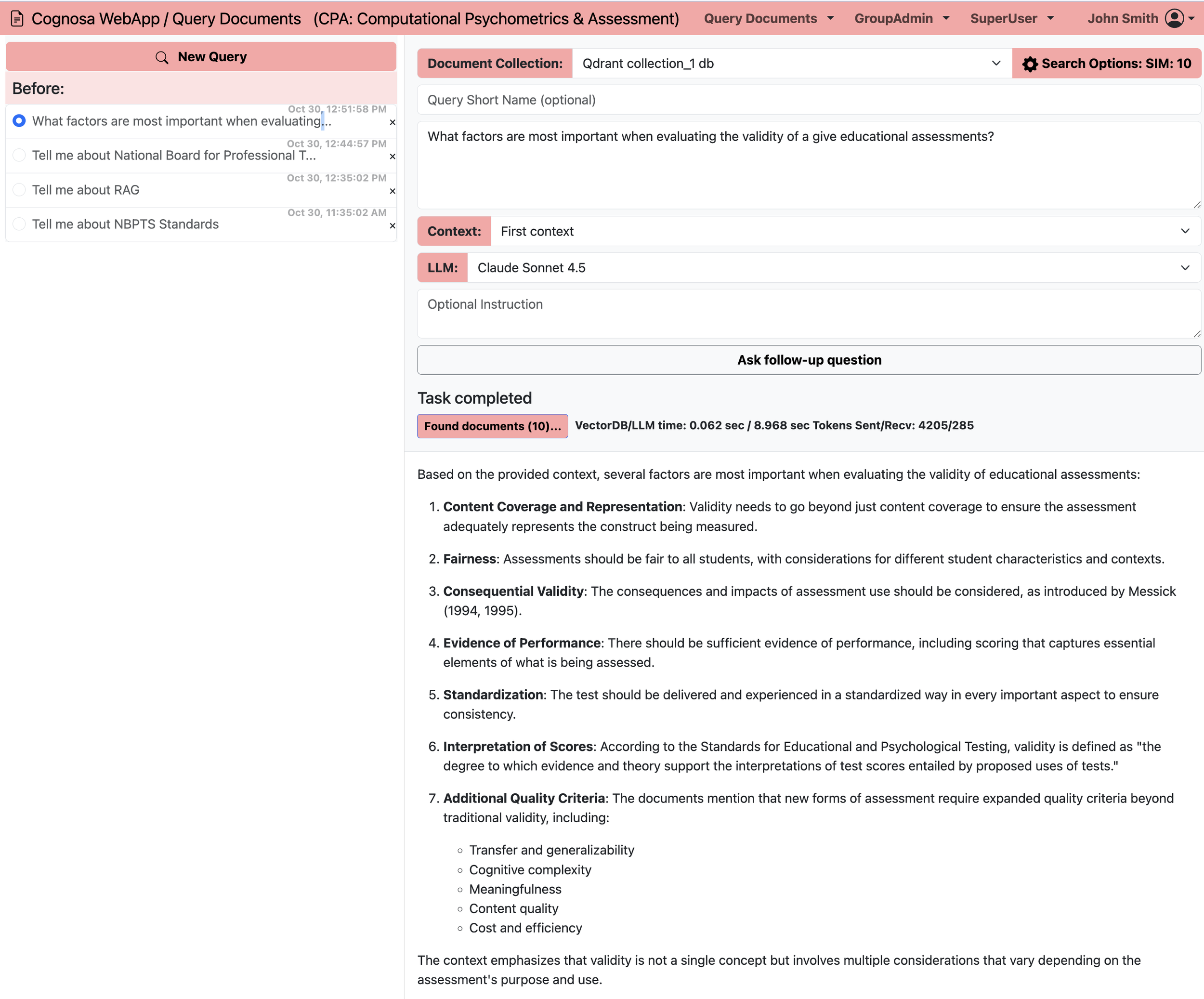

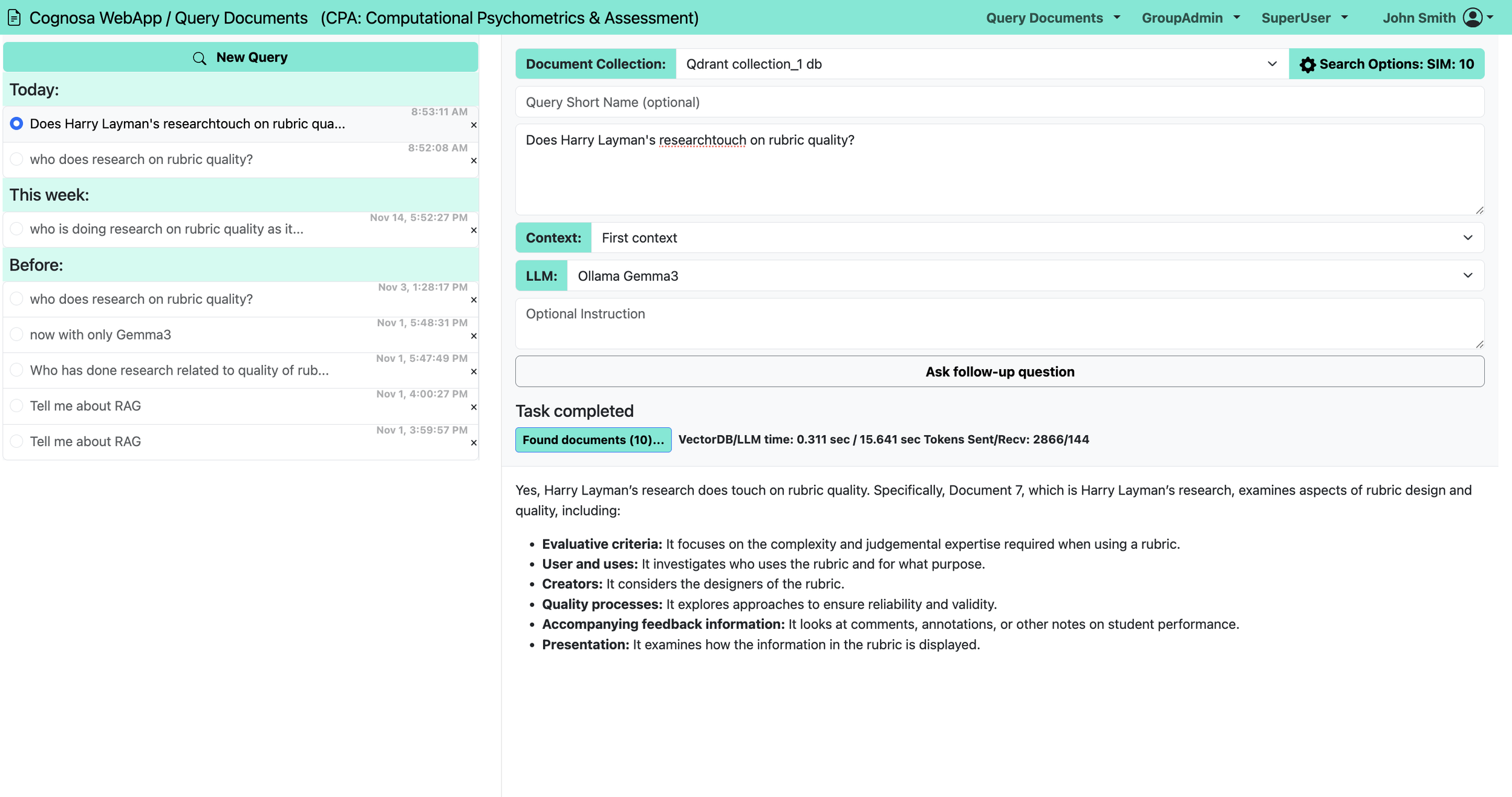

Augmented Queries with Company Data

Organizations often maintain multiple data collections: industry research, regulatory guidance, internal policies, customer service resources, product documentation, and more. Cognosa can connect any or all of these collections to an LLM, giving users precise, context‑rich answers. In the example shown, the Cognosa workspace offers a choice between an open‑source Llama‑3 70B model (optimized for accuracy and speed) and Anthropic’s Claude Sonnet 4.5 model. Queries sent to Claude are billed directly to the customer’s Anthropic API account, and Cognosa logs all queries and responses for later review.
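The difference between a direct query and an augmented one can be illustrated with a minimal Python sketch. This is not Cognosa's implementation: the `Passage` type, the `retrieve` and `build_prompt` functions, the sample passages, and the naive word-overlap ranking are all hypothetical stand-ins for whatever retrieval and access control a real deployment uses.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    collection: str  # name of the data collection the text came from
    text: str


def retrieve(question, passages, allowed_collections, top_k=1):
    """Rank passages from authorized collections by naive word overlap."""
    q_words = set(question.lower().split())
    # Only collections the user is authorized to access are considered.
    candidates = [p for p in passages if p.collection in allowed_collections]
    ranked = sorted(
        candidates,
        key=lambda p: len(q_words & set(p.text.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, context=None):
    """Return the bare question (direct) or question plus context (augmented)."""
    if not context:
        return question
    context_block = "\n".join(f"[{p.collection}] {p.text}" for p in context)
    return (
        "Answer using the context below.\n\n"
        f"Context:\n{context_block}\n\nQuestion: {question}"
    )


# Hypothetical sample data, for illustration only.
passages = [
    Passage("internal policies", "Remote work requires manager approval."),
    Passage("product documentation", "The widget supports USB-C charging."),
    Passage("regulatory guidance", "Remote work records must be retained."),
]
question = "What approval does remote work require?"
allowed = {"internal policies", "product documentation"}

direct_prompt = build_prompt(question)
context = retrieve(question, passages, allowed)
augmented_prompt = build_prompt(question, context)
print(augmented_prompt)
```

The augmented prompt is longer than the direct one because it carries the retrieved context, which is why augmented queries consume more input tokens.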

Illustrative Token Costs

As of this writing, Anthropic’s pricing for the Sonnet 4 family (200k‑token context window) is approximately:

• $3 per 1M input tokens

• $15 per 1M output tokens

A typical context‑augmented query may include about 3,000 input tokens (a short question plus relevant retrieved context) and return about 1,000 output tokens, which costs about 0.024 USD at the rates above. At a volume of roughly 5,280 such queries per month (for example, 240 per workday over 22 workdays), this usage comes to roughly 126.72 USD per month, regardless of whether it is one person making many queries or several users making fewer queries each.

By contrast, a direct LLM query (no contextual augmentation) might use only 450 tokens in and 350 tokens out, or about 0.0066 USD per query, totaling an estimated 34.85 USD per month for the same query volume.
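The arithmetic behind these estimates can be sketched in a few lines of Python. The per‑token rates and token counts come from the text. The monthly volume of 5,280 queries is an assumption, chosen because it is the volume at which the quoted monthly totals are reproduced. The function and constant names are illustrative.

```python
# Illustrative list prices in USD per 1M tokens, as quoted in the text.
INPUT_USD_PER_MTOK = 3.00
OUTPUT_USD_PER_MTOK = 15.00

# Assumption: the volume that reproduces the quoted monthly totals
# (for example, 240 queries per workday over 22 workdays).
QUERIES_PER_MONTH = 5_280


def query_cost(input_tokens, output_tokens):
    """Estimated USD cost of a single query."""
    return (
        input_tokens * INPUT_USD_PER_MTOK
        + output_tokens * OUTPUT_USD_PER_MTOK
    ) / 1_000_000


def monthly_cost(input_tokens, output_tokens, queries_per_month):
    """Estimated USD cost of a month of identical queries."""
    return query_cost(input_tokens, output_tokens) * queries_per_month


augmented = monthly_cost(3_000, 1_000, QUERIES_PER_MONTH)
direct = monthly_cost(450, 350, QUERIES_PER_MONTH)

print(f"Augmented: {query_cost(3_000, 1_000):.4f} USD/query, {augmented:.2f} USD/month")
print(f"Direct:    {query_cost(450, 350):.4f} USD/query, {direct:.2f} USD/month")
```

Changing `QUERIES_PER_MONTH` or the token counts shows how sensitive the monthly figure is to usage. Output tokens cost five times as much as input tokens at these rates, so response length matters more than prompt length.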

Examples

The figures below show how Cognosa displays both types of queries:

a) direct questions to an LLM, and

b) augmented questions answered using your organization’s specialized data collections.