Semantic Chunking

Or, more simply: how smart, customized RAG solutions deliver better answers

It probably won’t surprise anyone that a consultancy focused on semantic technologies believes that semantic decomposition — or the more headline-friendly term semantic chunking — really matters. Whether you’re building a generative AI assistant or a more traditional analytics workflow, better decisions usually come down to having the right information at the right moment. That might mean real-time sensor data driving an automated process, or it might mean helping a human answer a detailed question about a product, regulation, or technical system. In either case, identifying the relevant context quickly and accurately is what makes the answer useful.

For small or tightly scoped knowledge bases, this isn’t hard. A simple chatbot can scan most or all of the available material and produce a reasonable response. But once you’re dealing with hundreds or thousands of pages — manuals, specifications, discussions, updates, and historical notes — brute-force approaches stop working. You can’t realistically pass all that material to an LLM every time, and retraining a custom model is expensive, slow, and often impractical when the data is changing.

This is exactly why Retrieval-Augmented Generation (RAG) has become so popular. Instead of training the model on everything, you retrieve just the most relevant context and use that to support generation. But this is also where many RAG systems start to struggle. If your documents are simply chopped into uniform blocks and indexed, the retrieved “chunks” can end up being a kind of word salad — loosely related text that looks relevant on the surface but doesn’t actually answer the question being asked.
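
The retrieval step described above can be sketched in a few lines. This is a minimal illustration, not how any particular platform implements it: it scores chunks with a simple bag-of-words cosine similarity, where a production system would typically use learned embeddings and a vector database. The function names and the sample chunks are invented for the example.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:top_k]


# Made-up sample chunks, for illustration only.
chunks = [
    "To reset the luminaire, switch the power off and on five times.",
    "The warranty covers manufacturing defects for two years.",
    "Firmware updates are installed from the mobile app over Bluetooth.",
]
top = retrieve("How do I reset the luminaire?", chunks, top_k=1)
print(top[0])
```

Only the retrieved chunks, not the whole collection, are then passed to the LLM along with the question. The quality of those chunks is what the rest of this post is about.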

That’s where semantic chunking comes in. On the Cognosa AI-as-a-Service platform, we load data in a way that tries to preserve meaning, not just token counts. The goal is to capture complete ideas — instructions, definitions, explanations, constraints — at a level of detail that makes sense for each source. A 300-page user manual needs to be handled very differently from a collection of recipes or short biographies. Product catalogs introduce their own challenges, especially when many items share similar attributes and options and the system must not mix them up.

You’ll often hear people talk about different “chunking strategies,” and a quick search will turn up plenty of debates about which approach is best. But when you step back, most of these discussions are really circling the same principle: keep meaningful, coherent information together, and don’t break associations that matter. The specifics vary by use case and data source, but the underlying goal is the same — make it easy for the system to retrieve context that actually helps answer the question.
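
To make the principle concrete, here is a small sketch contrasting uniform chunking with a structure-aware alternative. It assumes paragraphs are separated by blank lines and treats each paragraph as one "complete idea", which is a simplification: real sources usually need format-specific rules. The function names and sample text are hypothetical.

```python
def fixed_size_chunks(text: str, size: int) -> list[str]:
    """Uniform blocks of `size` characters, ignoring meaning."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def paragraph_chunks(text: str, max_chars: int) -> list[str]:
    """Group whole paragraphs into chunks, never splitting inside one.

    A single paragraph longer than max_chars is kept intact as its own chunk.
    """
    chunks: list[str] = []
    current = ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        candidate = f"{current}\n\n{para}" if current else para
        if current and len(candidate) > max_chars:
            chunks.append(current)  # close the chunk at a paragraph boundary
            current = para
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


# Made-up sample document with three self-contained instructions.
doc = (
    "Pairing: Hold the button for three seconds until the LED blinks.\n\n"
    "Reset: Switch the power off and on five times.\n\n"
    "Warranty: Defects are covered for two years."
)

fixed = fixed_size_chunks(doc, 80)
semantic = paragraph_chunks(doc, 80)
print(fixed)     # the first block ends partway through the "Reset" instruction
print(semantic)  # each chunk is one complete instruction
```

With the same size budget, the fixed-size split cuts the reset instruction in half, while the paragraph-aware split keeps each instruction whole.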

There are, of course, many ways to implement this. You can describe semantic chunking in algorithmic terms, or focus on whether the parsing is done with LLMs, traditional NLP tools, rule-based approaches, or some hybrid of all three. Those choices matter for cost, performance, and reliability, but they’re ultimately tactical. The strategy is not “use an LLM” or “use a specific algorithm”; the strategy is to organize knowledge in a way that consistently supports high-quality answers.

In the real world, data is rarely neat or homogeneous. A collection of journal articles or a large email archive is about as simple as it gets. Most enterprise knowledge bases are far messier: user manuals, standards and regulations, technical specifications, customer correspondence, chat logs, spreadsheets, diagrams, tables, and scanned documents all mixed together. Making this usable requires organizing content based on what it means and how it relates to likely questions — not just where it appears in a file.

At CogWrite, we’ve built and refined RAG collections using a range of tools and techniques across many projects. We maintain a growing library of ingestion routines and have created vector databases for different types of domain-specific AI solutions. This allows us to adapt quickly while still tailoring the approach to the data and the problem at hand.

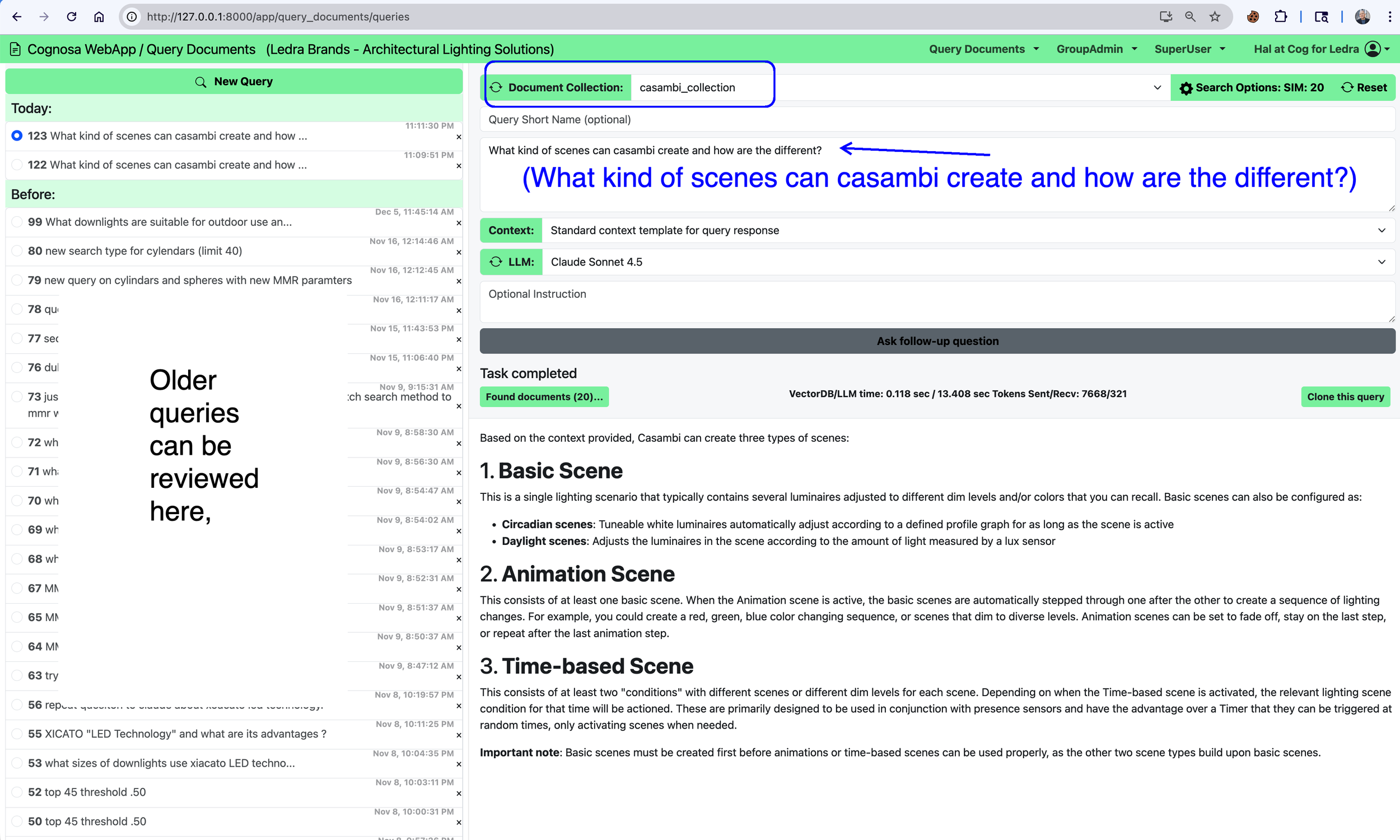

One example is our work around Casambi, a wireless lighting control technology built on Bluetooth Low Energy. There’s a wealth of publicly available material about Casambi (documentation, specifications, guides, presentations, and discussions), but it exists in many formats and levels of detail. We built a Casambi document collection specifically to support a question-and-answer workflow, and it has become one of our internal testbeds for experimenting with more sophisticated semantic chunking techniques and retrieval strategies.

A sample query and response from that system is shown below.

Cognosa Capabilities: LLM access options

Open Source or Proprietary Large Language Models

Queries With — or Without — Context

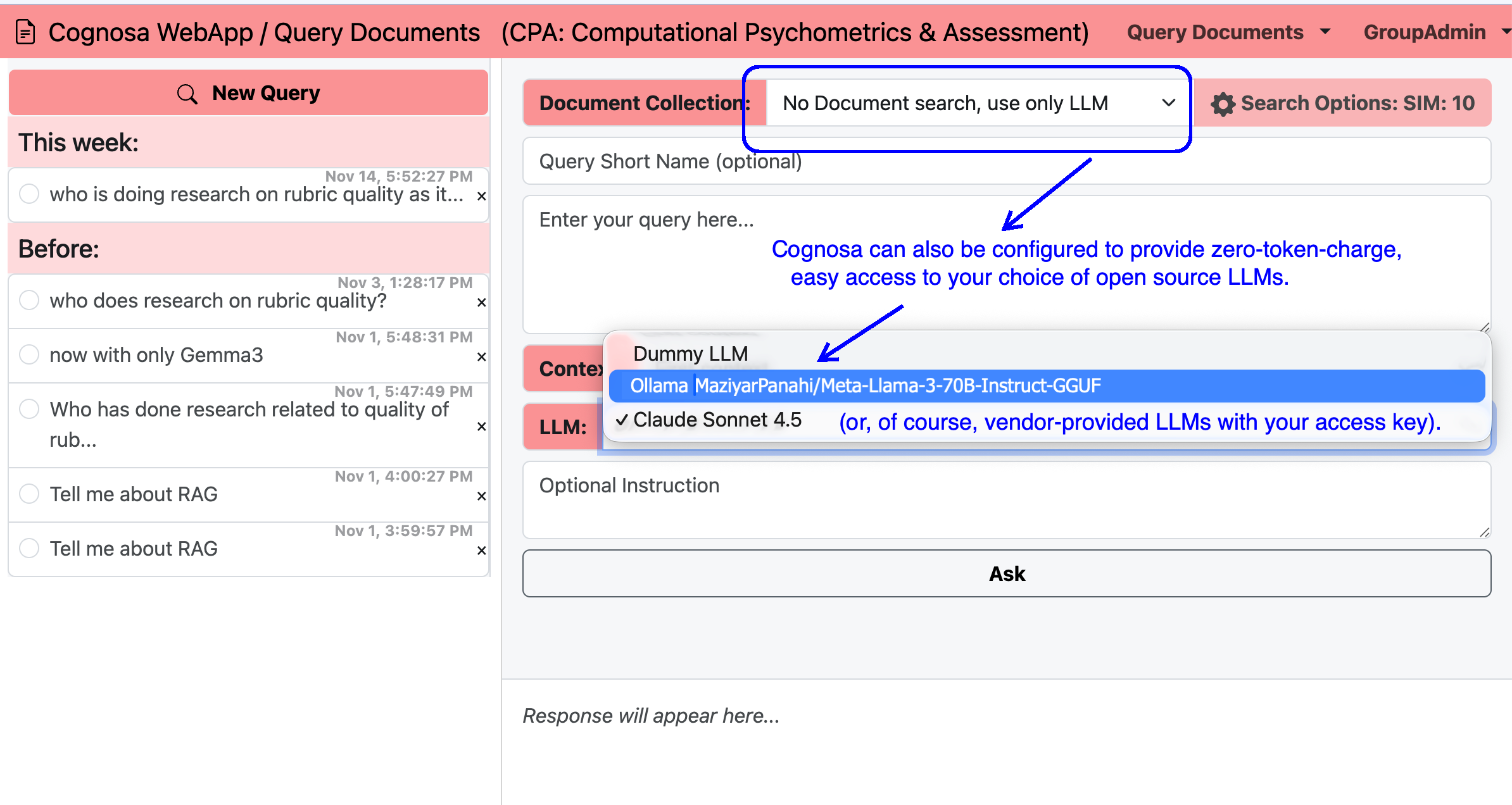

Cognosa supports two complementary ways of using AI. First, users can ask questions directly to a large language model, much like using a standard chatbot. Second—and far more powerful—users can query an LLM that is enhanced with organization‑specific data collections they are authorized to access. Both modes work with either free, open‑source models (no per‑token charges) or commercial models such as those from Anthropic or OpenAI, using the customer’s own API key.

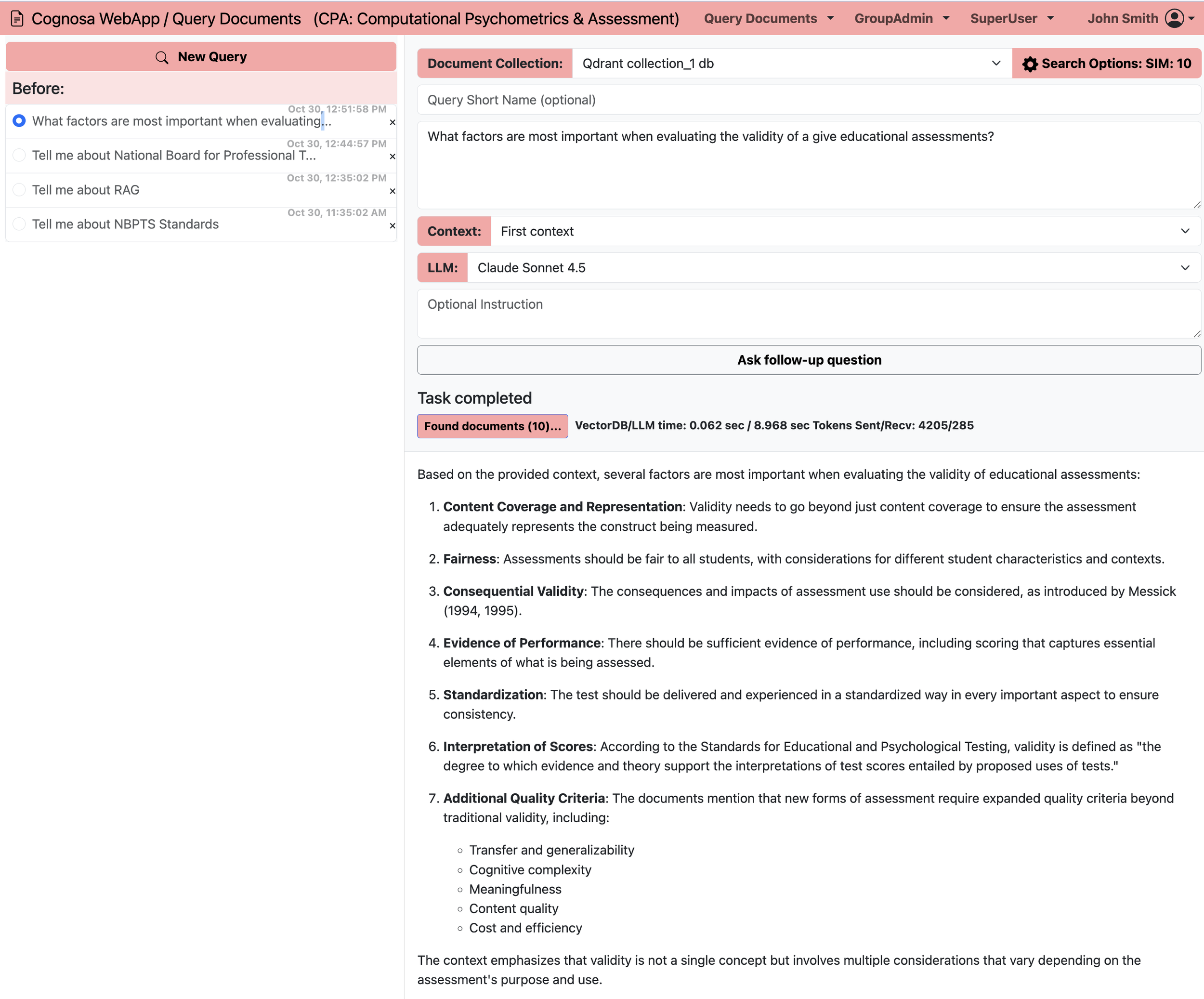

Augmented Queries with Company Data

Organizations often maintain multiple data collections: industry research, regulatory guidance, internal policies, customer service resources, product documentation, and more. Cognosa can connect any or all of these collections to an LLM, giving users precise, context‑rich answers. In the example shown, this Cognosa workspace offers access to an open‑source Llama‑3 70B model (optimized for accuracy and speed) or Anthropic’s Claude Sonnet 4.5 model. Queries sent to Claude are billed directly to the customer’s Anthropic API account, while Cognosa saves and logs all queries and responses for later review.
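
The difference between a direct query and an augmented one comes down to what is sent to the model. The sketch below is an illustration under stated assumptions, not Cognosa's actual prompt format or access-control logic: `authorized_chunks` and `build_prompt` are hypothetical helpers, the collection names and contents are made up, and a real system would rank chunks by relevance rather than pass along everything in a collection.

```python
from typing import Optional


def authorized_chunks(collections: dict[str, list[str]], allowed: set[str]) -> list[str]:
    """Collect chunks only from the collections this user may access."""
    return [
        chunk
        for name, chunks in collections.items()
        if name in allowed
        for chunk in chunks
    ]


def build_prompt(question: str, context: Optional[list[str]] = None) -> str:
    """Return the bare question (direct mode) or a context-augmented prompt."""
    if not context:
        return question
    numbered = "\n".join(f"[{i}] {c}" for i, c in enumerate(context, start=1))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question}"
    )


# Made-up collections, for illustration only.
collections = {
    "product_docs": ["Model A supports dimming from 1% to 100%."],
    "hr_policies": ["Vacation requests need manager approval."],
}
question = "What dimming range does Model A support?"

direct_prompt = build_prompt(question)
context = authorized_chunks(collections, allowed={"product_docs"})
augmented_prompt = build_prompt(question, context)
print(augmented_prompt)
```

Filtering by authorization before the prompt is assembled means content from a collection the user cannot access never reaches the model.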

Illustrative Token Costs

As of this writing, Anthropic’s pricing for the Sonnet 4 family (200k‑token context window) is approximately:

• $3 per 1M input tokens

• $15 per 1M output tokens

A typical context‑augmented query may include about 3,000 input tokens (a short question plus relevant retrieved context) and return about 1,000 output tokens, which costs about 0.024 USD at the rates above. At roughly 5,280 such queries per month (about 240 per workday over 22 workdays), this usage comes to roughly 126.72 USD per month, regardless of whether it is one person making many queries or several users making fewer queries each.

By contrast, a direct LLM query (no contextual augmentation) might use only 450 tokens in and 350 tokens out, or about 0.0066 USD per query, totaling an estimated 34.85 USD per month for the same query volume.
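
The arithmetic behind these estimates is easy to reproduce. The sketch below uses the per-token prices and token counts quoted above. The monthly volume of 5,280 queries is derived rather than stated: it is the volume at which those prices and token counts yield the quoted monthly totals. Prices change, so treat the constants as placeholders.

```python
# Prices quoted above, in USD per 1M tokens (check current pricing before relying on them).
INPUT_USD_PER_MTOK = 3.00
OUTPUT_USD_PER_MTOK = 15.00


def query_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost of a single query in USD."""
    total = input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK
    return total / 1_000_000


def monthly_cost(input_tokens: int, output_tokens: int, queries_per_month: int) -> float:
    """Monthly cost in USD, rounded to cents."""
    return round(query_cost(input_tokens, output_tokens) * queries_per_month, 2)


augmented = query_cost(3_000, 1_000)  # context-augmented query
direct = query_cost(450, 350)         # direct query, no retrieved context

QUERIES_PER_MONTH = 5_280  # derived volume that matches the quoted monthly totals

print(f"augmented: {augmented:.4f} USD/query, "
      f"{monthly_cost(3_000, 1_000, QUERIES_PER_MONTH):.2f} USD/month")
print(f"direct:    {direct:.4f} USD/query, "
      f"{monthly_cost(450, 350, QUERIES_PER_MONTH):.2f} USD/month")
```

Because the cost is linear in query volume, the same functions can be rerun with your own token counts and traffic to get a first-order budget.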

Examples

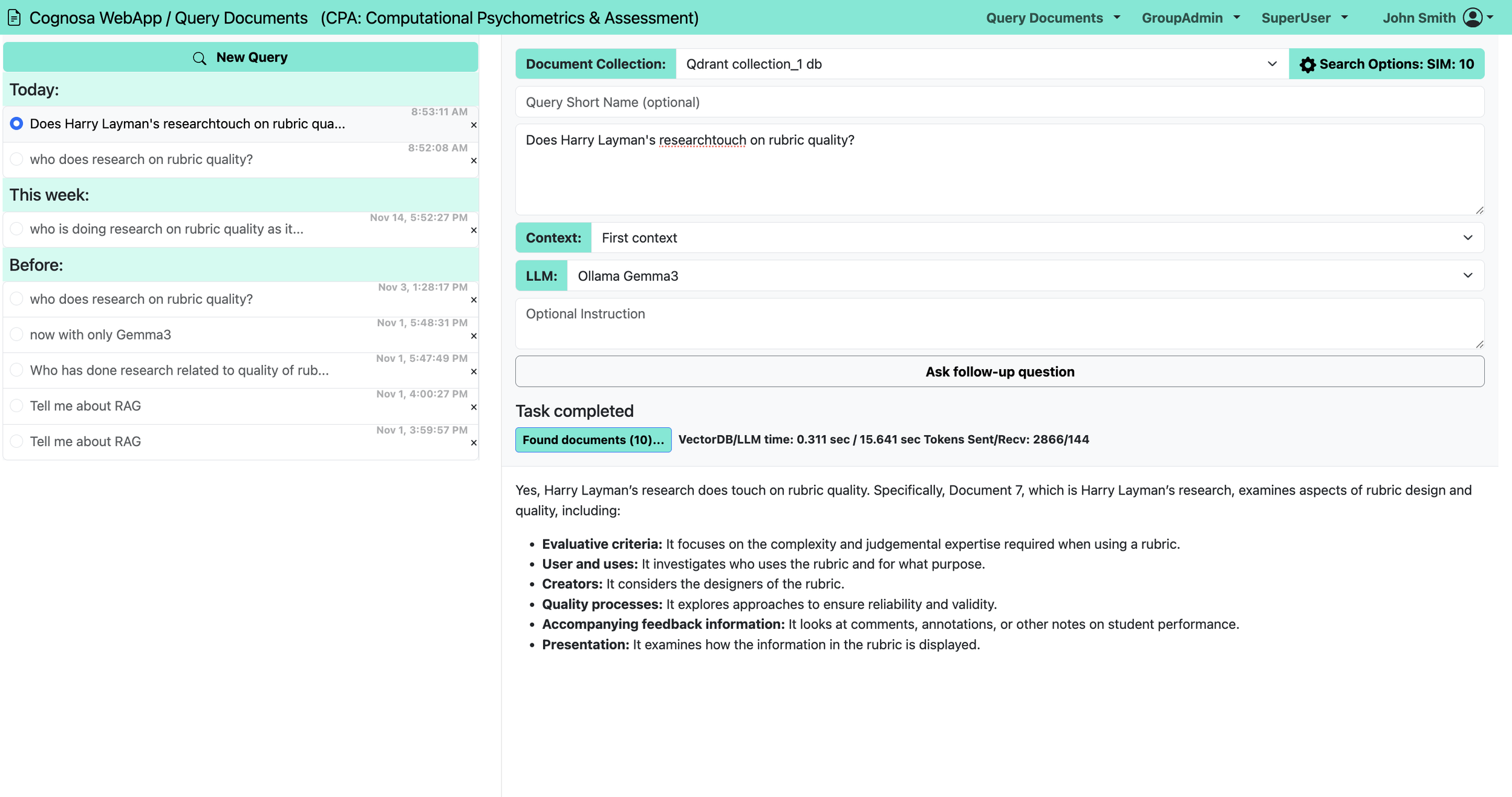

The figures below show how Cognosa displays both types of queries:

a) direct questions to an LLM, and

b) augmented questions answered using your organization’s specialized data collections.

Query without context:

Query with context: (1 of 3)

Query with context: (2 of 3)

Query with context: (3 of 3)

Cognosa solutions vs. Custom GPTs

One reason we created the Cognosa platform was that while some customers had quick success using Custom GPTs in OpenAI’s ecosystem, over time they found that the no-code approach could not answer certain queries reliably, could not scale to support larger or more complex datasets, or became very expensive at higher usage levels.

This post looks at OpenAI’s GPT Builder: how it works, what it does well, and where it differs from a purpose-built Retrieval-Augmented Generation (RAG) solution like Cognosa.

Setting the stage: Custom GPTs vs. a purpose-built RAG solution

Many organizations experimenting with AI start by testing Custom GPTs inside the OpenAI ecosystem. It’s an appealing option: upload some files, add instructions, and instantly get a conversational assistant that seems aware of your documents.

But the simplicity of a Custom GPT comes with inherent limitations. It offers convenience, not full control.

A purpose-built RAG solution — like the one Cognosa provides — takes a different approach. Your data, structure, semantics, indexing strategy, and retrieval logic become first-class components, giving you transparency, customization, and scalability that a generic builder cannot match.

Before jumping to the side-by-side comparison, it helps to understand what Custom GPTs actually do — and just as important, what they don’t.

What a Custom GPT Does Provide

Same base model, customized behavior

A Custom GPT uses the same underlying LLM that powers ChatGPT. You supply instructions and files, but you do not retrain or fine-tune the model’s weights.

Knowledge file uploads

You can upload PDFs, spreadsheets, or text files so the GPT can reference them during a conversation — e.g., “Use these spec sheets when answering product questions.”

Custom instructions

You define how the GPT should behave, how it should use its knowledge files, and what persona or style it should adopt.

Optional tools, actions, and APIs

You can enable external API calls, web browsing, or code execution to extend the assistant’s capabilities.

Internal retrieval from uploaded files

At runtime, the GPT can draw from your uploaded files for additional context. They serve as an internal reference set, not as training data.

No use of your data for public training

OpenAI states that Custom GPTs are not used to train or improve OpenAI’s public models.

What a Custom GPT does not (or at least not clearly) do

It is not fine-tuning.

Uploading files does not train new model weights. The GPT uses your files as additional context, not as learned parameters.

It does not replace a full RAG architecture.

You cannot control chunking, embeddings, vector indexes, or retrieval pipelines. The internal mechanics aren’t exposed and may not resemble a traditional RAG pipeline.

It still inherits normal LLM limitations.

Hallucinations, context-window limits, and confusion between similar content can still occur without careful structuring.

It does not permanently embed your data.

The GPT uses the base model’s tokenizer and inference engine. Your “knowledge files” act as temporary reference material, not integrated training data.

RAG vs Custom GPT — how they compare

Below is a side-by-side summary of how Cognosa’s RAG-first approach compares with OpenAI’s Custom GPT builder.

| Solution Area | Cognosa / RAG Approach | Custom GPT (OpenAI) |
|---|---|---|
| Data ingestion & indexing | Purpose-built vector database, tuned chunking, overlaps, similarity thresholds, and retrieval pipelines designed for your data and query patterns. | Upload files within limits; generic ingestion and chunking with minimal visibility or control. |
| Granular control over retrieval | Full control of embeddings, vector size, retrieval type, chunk size, and parameters. Tuned during customer validation. | Only files + instructions; embedding and retrieval logic remain opaque. |
| LLM usage | Use open-source or commercial LLMs; deploy in our cloud, your cloud, or on-prem. Open-source avoids per-token fees. | Tied to OpenAI’s API pricing; enterprise costs can be significant. |
| Model fine-tuning | Optional fine-tuning of open-source models; or use smaller models when retrieval matters more than reasoning. | No fine-tuning; behavior controlled only through prompts and context. |
| Latency & maintenance | Performance based on chosen infra; small 24×7 workloads run for low hundreds/month. | Minimal ops overhead; OpenAI manages everything. |
| Traceability & control | Transparent retrieval with logs, auditing, and explicit control over data access. | Opaque retrieval; limited insight into contributing content. |
| Setup speed | RAG is complex to build alone, but Cognosa provides a turnkey managed setup. | Very fast: upload files + instructions and begin testing. |
| Operational cost | Predictable: setup + monthly cost based on hosting, model, and usage. | Ongoing OpenAI token charges; low barrier to start, expensive at scale. |
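
Two of the tuning knobs named in the table, chunk overlap and similarity thresholds, can be illustrated briefly. This is a generic sketch with hypothetical function names and made-up scores, not Cognosa's implementation: overlap repeats a few tokens between neighboring chunks so that an idea spanning a boundary appears whole in at least one of them, and a threshold drops weak matches instead of always returning a fixed number of results.

```python
def sliding_window(tokens: list[str], size: int, overlap: int) -> list[list[str]]:
    """Split tokens into windows of `size` that share `overlap` tokens with their neighbor."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    if not tokens:
        return []
    step = size - overlap
    # Stop once the remaining tokens are already covered by the previous window.
    return [tokens[i:i + size] for i in range(0, max(len(tokens) - overlap, 1), step)]


def filter_hits(
    hits: list[tuple[str, float]], min_score: float, top_k: int
) -> list[tuple[str, float]]:
    """Keep hits scoring at least min_score, best first, at most top_k of them."""
    kept = [hit for hit in hits if hit[1] >= min_score]
    return sorted(kept, key=lambda hit: hit[1], reverse=True)[:top_k]


windows = sliding_window(list("abcdefghij"), size=4, overlap=1)
print(windows)

# Made-up (chunk_id, similarity) pairs, as a retrieval step might return them.
hits = [("chunk-a", 0.90), ("chunk-b", 0.40), ("chunk-c", 0.75)]
print(filter_hits(hits, min_score=0.7, top_k=5))
```

Suitable values for window size, overlap, and threshold depend on the data and the kinds of questions asked, which is why they are worth tuning per collection.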

Next time, we’ll take a closer look at how some of this works: the UI that gives advanced users access to alternative retrieval strategies and parameters.

Cognosa in the Spotlight: Why AI-as-a-Service Might Be Right for Your Business


Intro

Every few years, a technology shift quietly changes how work gets done.

AI-driven automation—especially Retrieval-Augmented Generation (RAG)—is one of those shifts. Yet many organizations aren’t benefiting from it. Not because the technology isn’t ready, but because their own data isn’t being used to make AI truly useful.

That’s the gap CogWrite Semantic Technologies built Cognosa to close.

Welcome to Cognosa in the Spotlight, a new blog series that explains AI-as-a-Service in practical, business-friendly terms—and shows how Cognosa will soon help small and mid-sized organizations put their own knowledge to work.

Why generic AI isn’t enough for real business tasks

ChatGPT-style assistants are impressive, but they do not:

• know your company policies

• know your products or parts inventory

• understand your compliance rules

• reflect your training materials

• capture years of institutional knowledge or workflows

Without your specialized data, a general-purpose model can only give general-purpose answers.

What changes everything is AI that’s augmented with your private, domain-specific knowledge.

The power of AI + your proprietary data (RAG)

Retrieval-Augmented Generation (RAG) lets an AI system draw on your actual documents, procedures, manuals, specifications, and knowledge bases—while keeping that data private and secure.

This unlocks practical, high-value use cases such as:

• Customer service augmentation – Answer customer questions from your real documentation, accurately, 24/7.

• Compliance guidance – Give staff precise, policy-correct answers in regulated environments.

• Technical support & troubleshooting – Integrate repair manuals, parts catalogs, service history, and SOPs.

• Employee onboarding & training – Help new hires ramp faster with an “expert in chat form.”

• Knowledge management & internal search – Replace clunky portals with natural-language answers.

In short: RAG turns your company’s documents into an always-available expert.

Why “AI-as-a-Service” matters

Most businesses don’t want to build and maintain their own:

• vector databases

• embedding pipelines

• content “chunking” and indexing

• API orchestration layers

• prompt templates

• monitoring and feedback loops

They do want the benefits.

AI-as-a-Service means you get the capability without the infrastructure, engineering overhead, or specialized in-house team. Cognosa’s mission is to make this accessible, reliable, and turnkey.

Where Cognosa is today—and what this blog will cover

CogWrite Semantic Technologies is approximately two months away from onboarding new beta users for Cognosa, our AI-as-a-Service platform.

Over the coming weeks, Cognosa in the Spotlight will explore:

• what problems AI can realistically solve

• how RAG works under the hood

• how businesses can prepare their data

• where AI provides immediate ROI, and where caution is warranted

• practical examples of real-world solutions

• how small and medium-sized teams can deploy AI effectively

Our goal is simple:

Help you identify the points in your workflow where an AI assistant—enhanced with your proprietary knowledge—can save time, reduce cost, and improve accuracy.

In future posts, we’ll walk through real-world solutions made possible by platforms like Cognosa and show how they can be implemented cost-effectively—without spending hundreds of thousands of dollars or paying $15,000/month for enterprise LLM access.